ROS 2 Navigation

1. Introduction

The goal of this tutorial is to use the ROS 2 navigation capabilities to move the robot autonomously.

The packages you will use:

workshop_ros2_navigation

Lines beginning with # are comments that explain the commands.

Commands are executed in a terminal:

Open a new terminal → use the shortcut ctrl+alt+t.

Open a new tab inside an existing terminal → use the shortcut ctrl+shift+t.

Lines beginning with $ show the syntax of the commands; type the command without the leading $.

The computer of the real robot will be accessed remotely from your local computer. For every further command, a tag will indicate which computer has to be used. It can be either [TurtleBot] or [Remote PC].

You can use the given links for further information.

2. General Approach

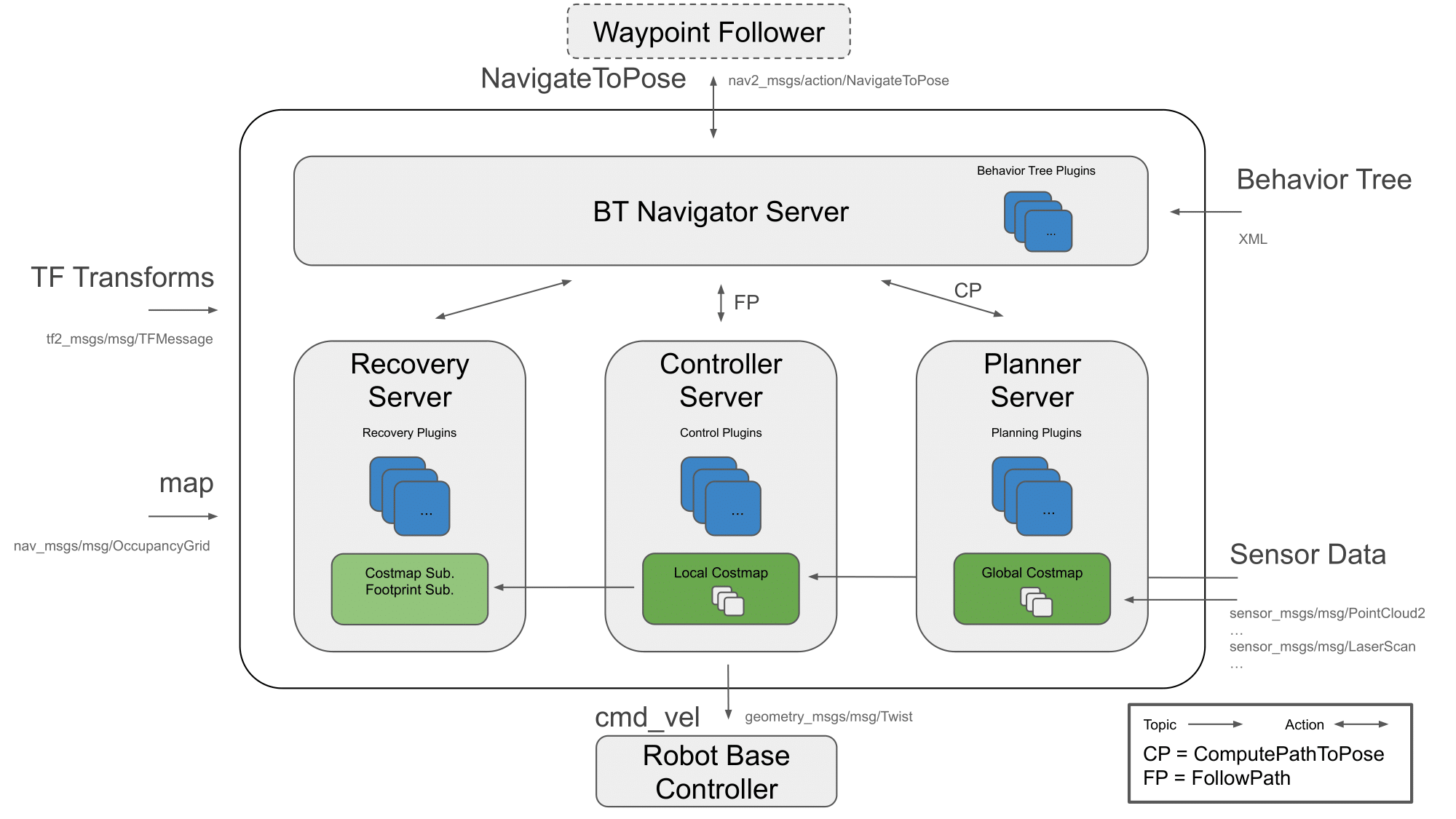

The ROS 2 Navigation System is the control system that enables a robot to autonomously reach a goal state, such as a specific position and orientation relative to a specific map. Given a current pose, a map, and a goal, such as a destination pose, the navigation system generates a plan to reach the goal, and outputs commands to autonomously drive the robot, respecting any safety constraints and avoiding obstacles encountered along the way.

It consists of several ROS components. An overview of its interactions is depicted in the following picture:

navigation_overview


Figure 1: Navigation2 Architecture

Source: navigation

3. Launch the navigation stack

3.1. Check the map

Check the location of your map

Once you create a map, you will have two files: “name of your map”.pgm and “name of your map”.yaml

For example, a workspace has the following layout:

nav_ws/
  maps/
    my_map.yaml
    my_map.pgm
  src/
  build/
  install/
  log/

Check that the path of “name of your map”.pgm given in “name of your map”.yaml is correct.

For example, the content of “my_map.yaml” is as follows:

# my_map.yaml
image: ./my_map.pgm
resolution: 0.050000
origin: [-10.000000, -10.000000, 0.000000]
negate: 0
occupied_thresh: 0.65
free_thresh: 0.196

Hint: make sure that my_map.yaml contains the correct path to my_map.pgm. The path of “my_map.pgm” in “my_map.yaml” is relative to the folder that contains the YAML file. So if “my_map.yaml” and “my_map.pgm” are in the same folder, the “image” parameter should be “image: ./my_map.pgm”.
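You can also check this path from a terminal before launching. Below is a minimal sketch of a bash helper. The name check_map_yaml is hypothetical and is not part of ROS 2 or of workshop_ros2_navigation. The sketch assumes a flat map YAML like the example above, with the image path on a single `image:` line.

```shell
# check_map_yaml: hypothetical helper, not part of ROS 2.
# Reads the "image:" entry of a map YAML file and checks that the image
# exists. Relative paths are resolved against the folder of the YAML file.
check_map_yaml() {
  local yaml="$1" image path
  if [ ! -f "$yaml" ]; then
    echo "map yaml not found: $yaml" >&2
    return 1
  fi
  # Value after "image:", without quotes and trailing whitespace.
  image=$(sed -n 's/^[[:space:]]*image:[[:space:]]*//p' "$yaml" | head -n 1 \
    | tr -d "\"'" | sed 's/[[:space:]]*$//')
  if [ -z "$image" ]; then
    echo "no image entry in $yaml" >&2
    return 1
  fi
  case "$image" in
    /*) path="$image" ;;                     # absolute path: use as-is
    *)  path="$(dirname "$yaml")/$image" ;;  # relative: next to the YAML file
  esac
  if [ -f "$path" ]; then
    echo "ok: $path"
  else
    echo "image not found: $path" >&2
    return 1
  fi
}
```

With the workspace layout above, running `check_map_yaml maps/my_map.yaml` from nav_ws should print `ok: maps/./my_map.pgm`.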

3.2. Start the simulated robot

Open a terminal

Set ROS environment variables

First, go into your workspace and source it:

$ source install/setup.bash

Set up the Gazebo model path:

$ export GAZEBO_MODEL_PATH=$(ros2 pkg prefix turtlebot3_gazebo)/share/turtlebot3_gazebo/models/

Set up the robot model that you will use:

$ export TURTLEBOT3_MODEL=burger
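These variables only exist in the terminal where you set them, so you have to repeat the steps above in every new terminal. To save typing, you can collect them in one function, for example in your ~/.bashrc. This is a minimal sketch. The function name tb3_sim_env and the default workspace path ~/nav_ws are assumptions.

```shell
# tb3_sim_env: hypothetical helper that repeats the setup steps above.
# Usage: tb3_sim_env [workspace]   (default workspace ~/nav_ws is an assumption)
tb3_sim_env() {
  local ws="${1:-$HOME/nav_ws}" prefix
  if [ -f "$ws/install/setup.bash" ]; then
    # Source the workspace so that its packages can be found.
    source "$ws/install/setup.bash"
  else
    echo "warning: $ws/install/setup.bash not found (workspace not built?)" >&2
  fi
  export TURTLEBOT3_MODEL=burger
  # Gazebo model path, only if the ros2 CLI can find turtlebot3_gazebo.
  if command -v ros2 >/dev/null 2>&1 &&
     prefix=$(ros2 pkg prefix turtlebot3_gazebo 2>/dev/null); then
    export GAZEBO_MODEL_PATH="$prefix/share/turtlebot3_gazebo/models/"
  else
    echo "warning: turtlebot3_gazebo not found, GAZEBO_MODEL_PATH not set" >&2
  fi
}
```

After defining it, run `tb3_sim_env ~/nav_ws` in each new terminal before the launch commands.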

Bring up the TurtleBot3 in simulation

# in the same terminal, run
$ ros2 launch turtlebot3_gazebo turtlebot3_world.launch.py

Start the navigation stack

Open another terminal, source your workspace, set up the robot model that you will use, then

$ ros2 launch turtlebot3_navigation2 navigation2.launch.py \
    use_sim_time:=true map:=maps/"your map name".yaml

The path of the map is relative to the directory in which you run this command.
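If you want to start the launch file from another directory, pass an absolute path instead. Below is a minimal sketch of a bash helper. The name abs_map_path is hypothetical and is not part of ROS 2. It checks that the map file exists and prints its absolute path.

```shell
# abs_map_path: hypothetical helper, not part of ROS 2.
# Prints the absolute path of a map YAML file, or fails if it does not exist.
abs_map_path() {
  local map="$1"
  if [ ! -f "$map" ]; then
    echo "map not found: $map (looked relative to $PWD)" >&2
    return 1
  fi
  # Resolve the folder in a subshell so the current directory is unchanged.
  echo "$(cd "$(dirname "$map")" && pwd)/$(basename "$map")"
}
```

For example, run this from nav_ws: `ros2 launch turtlebot3_navigation2 navigation2.launch.py use_sim_time:=true map:="$(abs_map_path maps/my_map.yaml)"`. If the file is missing, the helper prints an error and the map argument stays empty.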

You also can use this command to check which parameter that you can define:

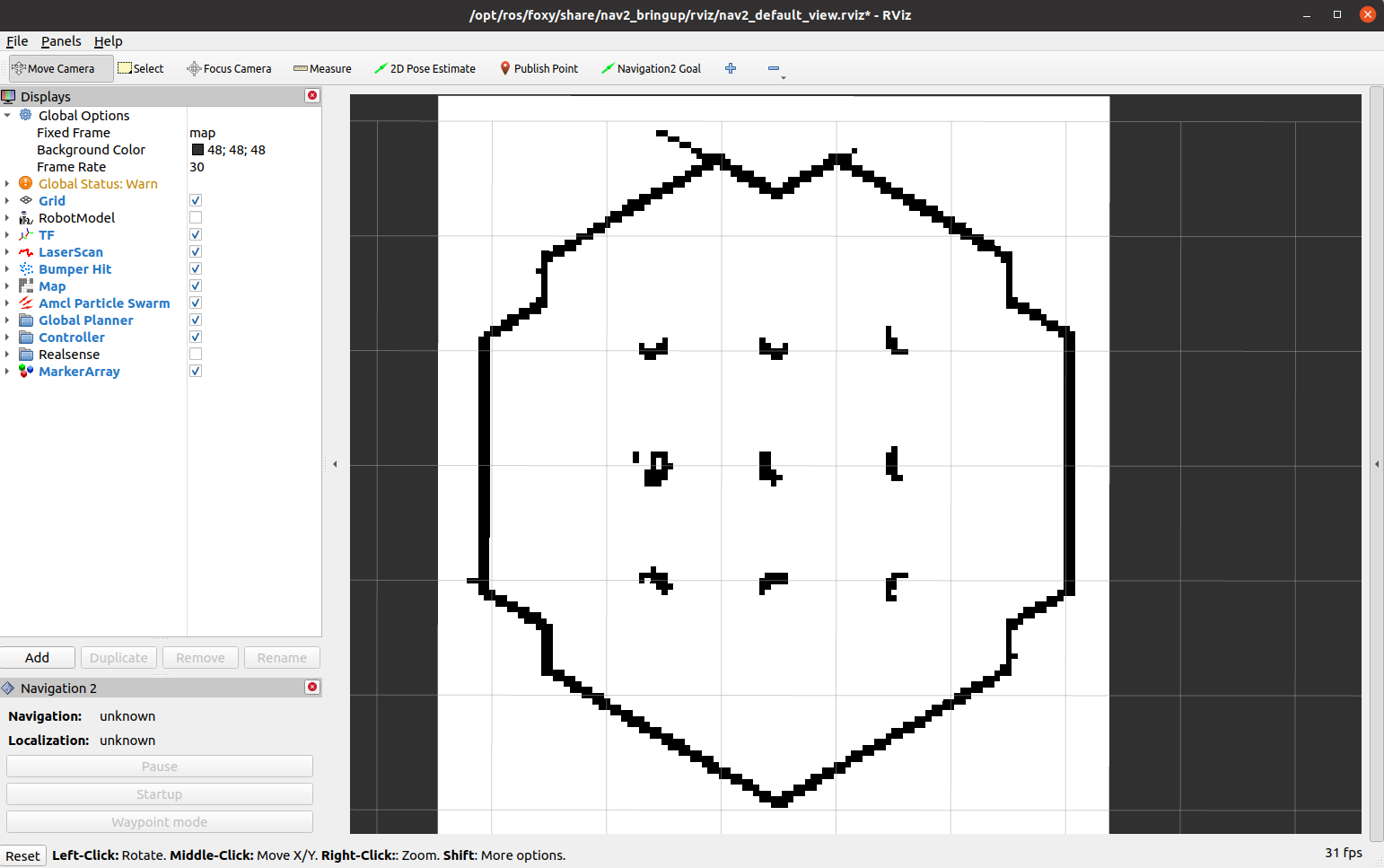

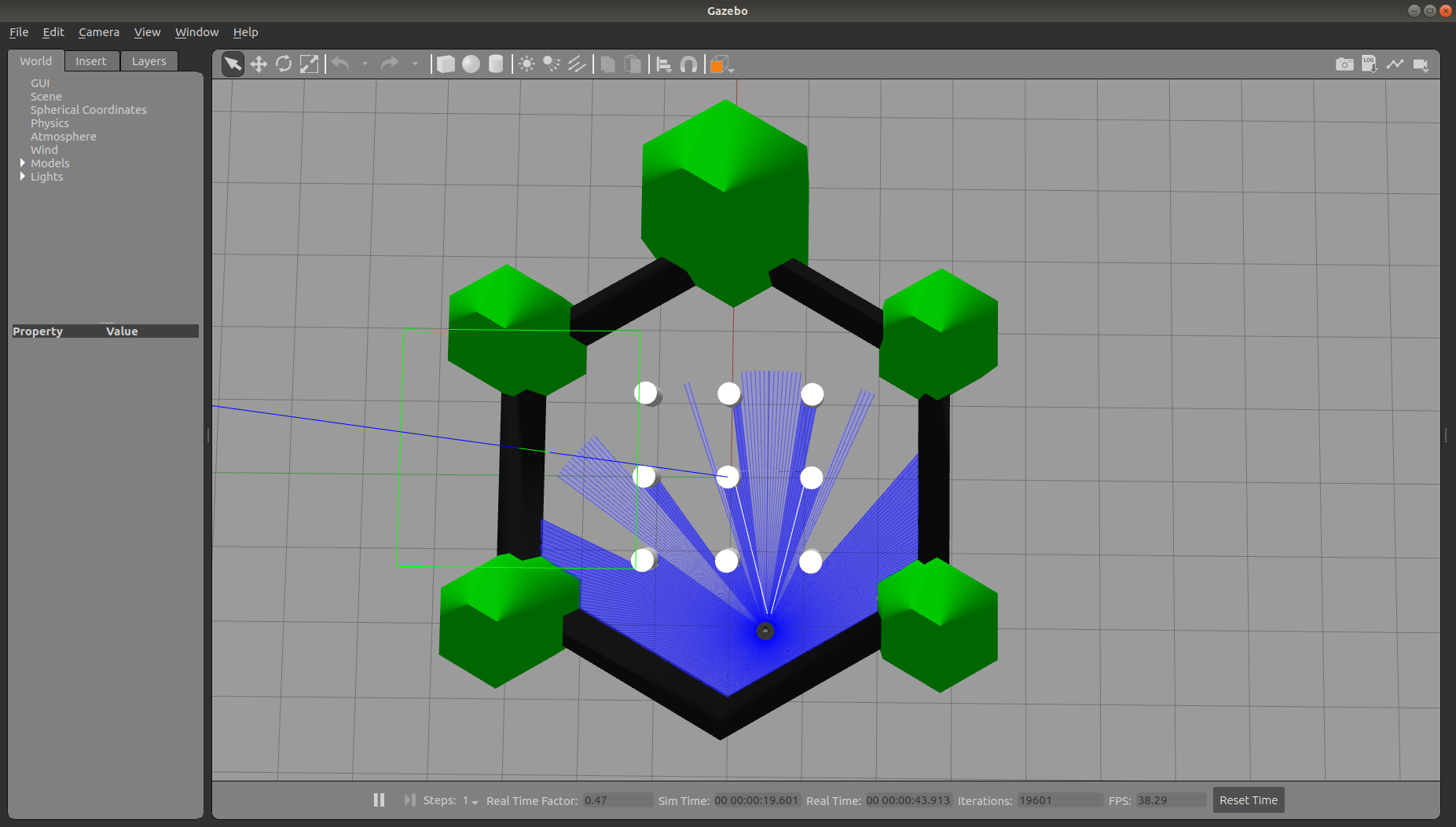

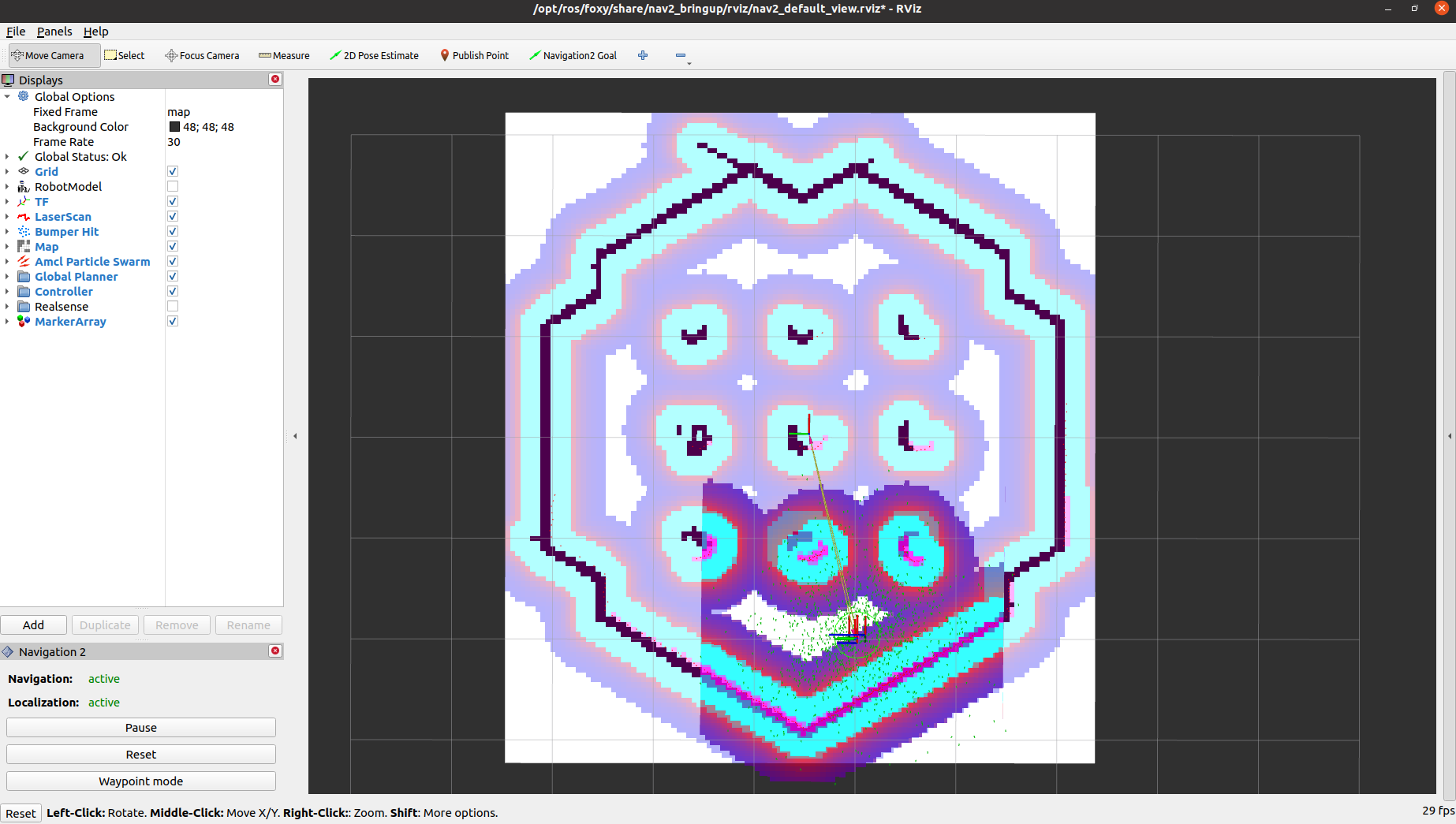

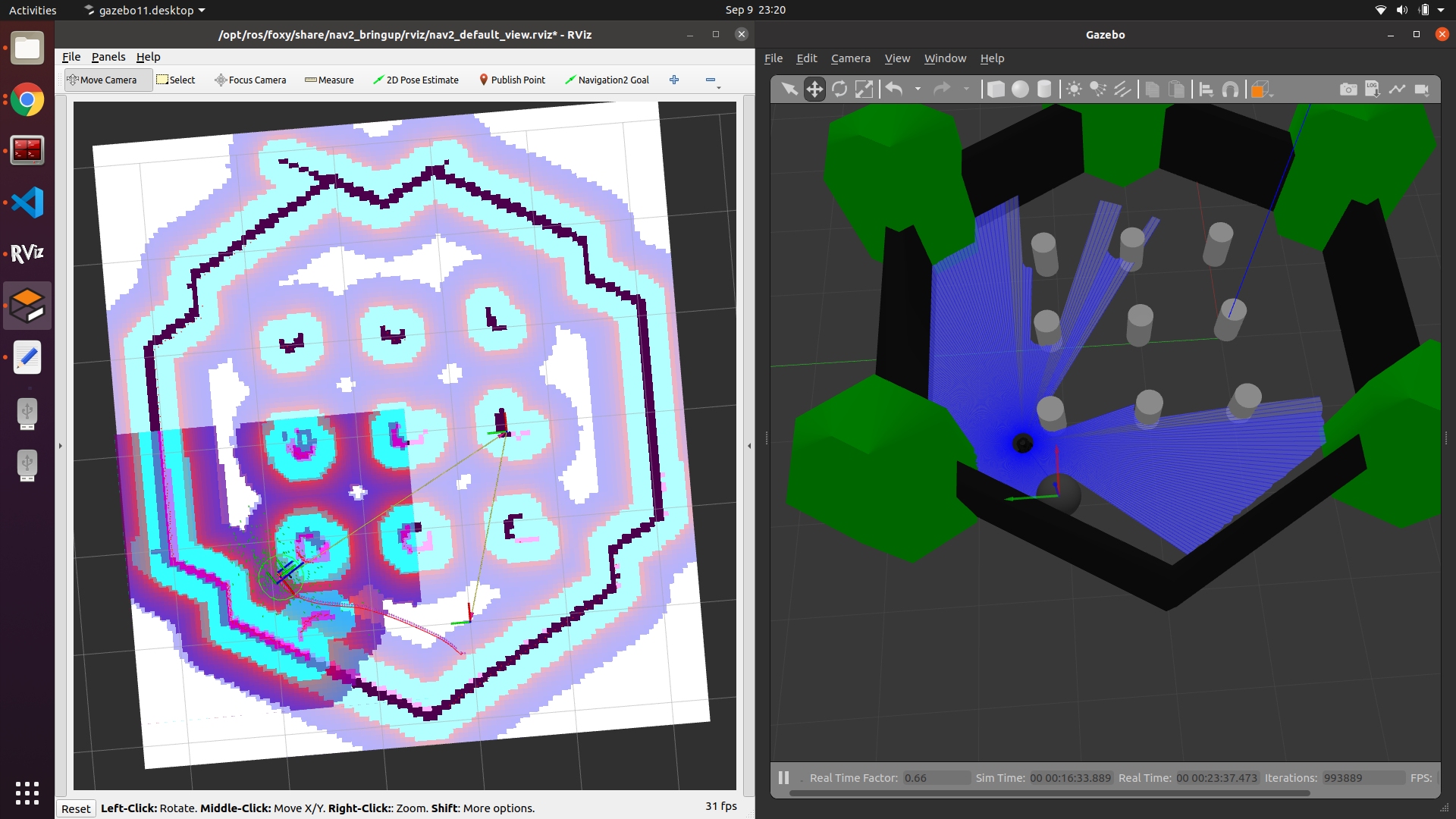

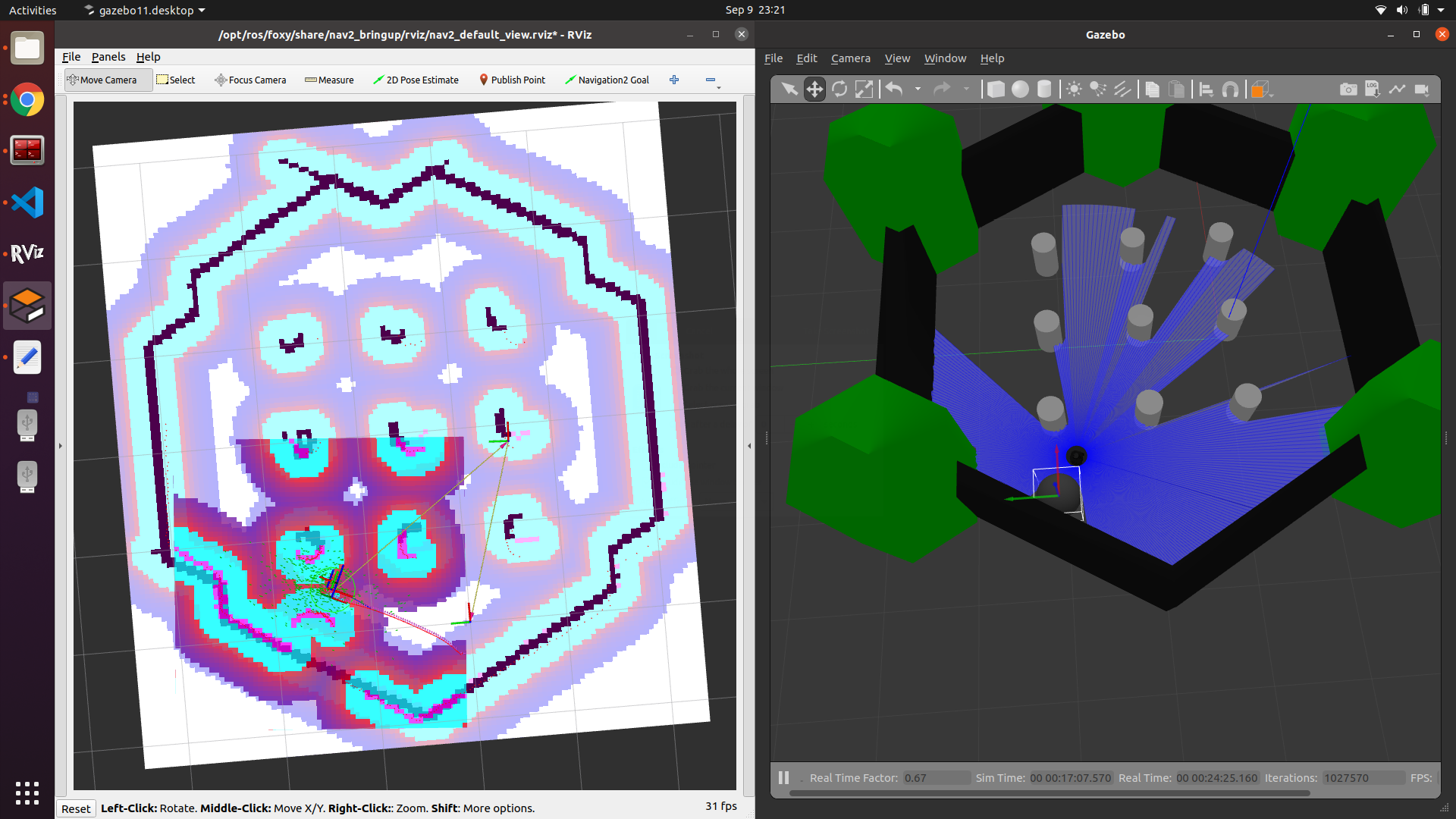

ros2 launch turtlebot3_navigation2 navigation2.launch.py \ --show-argsIf everything has started correctly, you will see the RViz and Gazebo GUIs like this.

rviz_navigation_1 gazebo_navigation_1

3.3. Start with a physical robot

Open a terminal on TurtleBot3.

Bring up basic packages to start TurtleBot3 applications.

[TurtleBot3]

$ source .bashrc

$ ros2 launch turtlebot3_bringup robot.launch.py

[Remote PC]

$ cd "your workspace"

$ source install/setup.bash

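$ export ROS_DOMAIN_ID="same ROS_DOMAIN_ID as the TurtleBot you are using"

Both computers must use the same ROS_DOMAIN_ID; otherwise the Remote PC cannot see the topics of the TurtleBot.

$ ros2 launch turtlebot3_navigation2 navigation2.launch.py \
    map:=maps/map.yaml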
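Two problems are common at this step: an invalid ROS_DOMAIN_ID, or a Remote PC that does not see the TurtleBot's topics. Below is a minimal sketch of two hypothetical helpers. Their names are ours; they are not ROS 2 commands. The 0 to 101 range follows the ROS 2 documentation's recommendation for a domain ID that is safe on all platforms. The topics /scan and /odom are assumed to be among those published by the TurtleBot3 bringup.

```shell
# set_domain_id: hypothetical helper. Exports ROS_DOMAIN_ID after checking
# that it is a whole number in the recommended range 0-101.
set_domain_id() {
  case "$1" in
    ''|*[!0-9]*) echo "not a whole number: '$1'" >&2; return 1 ;;
  esac
  if [ "$1" -gt 101 ]; then
    echo "domain ID $1 is outside the recommended range 0-101" >&2
    return 1
  fi
  export ROS_DOMAIN_ID="$1"
}

# missing_topics: hypothetical helper. Reads a topic list (one per line, as
# printed by "ros2 topic list") on stdin and prints every expected topic
# that is not in it. Returns 1 if anything is missing.
missing_topics() {
  local listed expected missing=0
  listed=$(cat)
  for expected in "$@"; do
    if ! printf '%s\n' "$listed" | grep -qxF -- "$expected"; then
      echo "$expected"
      missing=1
    fi
  done
  return "$missing"
}
```

On the Remote PC, call `set_domain_id` with the TurtleBot's ID. Then `ros2 topic list | missing_topics /scan /odom` should print nothing if both topics are visible.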

4. Navigate the robot via RViz

Step 1: Tell the robot where it is

After starting, the robot has no idea where it is. By default, Navigation 2 waits for you to give it an approximate starting position.

You have to set the initial location and orientation of the TurtleBot3 manually. This information is passed to the AMCL algorithm.

This can be done graphically in RViz as described below:

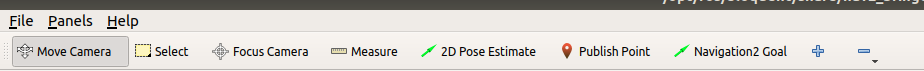

rviz_initial

Click the “2D Pose Estimate” button (in the top menu; see the picture).

Click on the approximate point in the map where the TurtleBot3 is located and drag the cursor to indicate the direction where TurtleBot3 faces.

If you don’t get the location exactly right, that’s fine. Navigation 2 will refine the position as it navigates. You can also click the “2D Pose Estimate” button and try again if you prefer.

Once you’ve set the initial pose, the tf tree will be complete and Navigation 2 is fully active and ready to go.
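Under the hood, the “2D Pose Estimate” tool publishes a geometry_msgs/msg/PoseWithCovarianceStamped message on the /initialpose topic, and AMCL subscribes to that topic. If you prefer the command line, the sketch below builds such a message from x and y (metres, map frame) and a yaw angle (degrees). The helper name initialpose_msg is hypothetical. The covariance values are also assumptions: 0.25 m² in x and y and 0.0685 rad² in yaw, which correspond to standard deviations of 0.5 m and 15°. They leave AMCL some room to correct the pose.

```shell
# initialpose_msg: hypothetical helper. Prints a PoseWithCovarianceStamped
# message in YAML syntax for "ros2 topic pub".
# Arguments: x [m], y [m], yaw [deg], all in the map frame.
initialpose_msg() {
  awk -v x="$1" -v y="$2" -v yaw_deg="$3" 'BEGIN {
    pi = atan2(0, -1)
    r = yaw_deg * pi / 180
    # Rotation about z only: quaternion (0, 0, sin(yaw/2), cos(yaw/2)).
    qz = sin(r / 2); qw = cos(r / 2)
    # 6x6 row-major covariance (x, y, z, roll, pitch, yaw); assumed values.
    for (i = 0; i < 36; i++) c[i] = 0
    c[0] = 0.25; c[7] = 0.25; c[35] = 0.0685
    cov = c[0]
    for (i = 1; i < 36; i++) cov = cov ", " c[i]
    printf "{header: {frame_id: map}, pose: {pose: {position: {x: %.3f, y: %.3f, z: 0.0}, orientation: {x: 0.0, y: 0.0, z: %.4f, w: %.4f}}, covariance: [%s]}}\n", x, y, qz, qw, cov
  }'
}
```

Usage, with placeholder coordinates that you need to read off your own map: `ros2 topic pub --once /initialpose geometry_msgs/msg/PoseWithCovarianceStamped "$(initialpose_msg 0.0 0.0 0)"`. If the pose does not appear in RViz, publish it again, because a single message can be missed while the connection is being set up.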

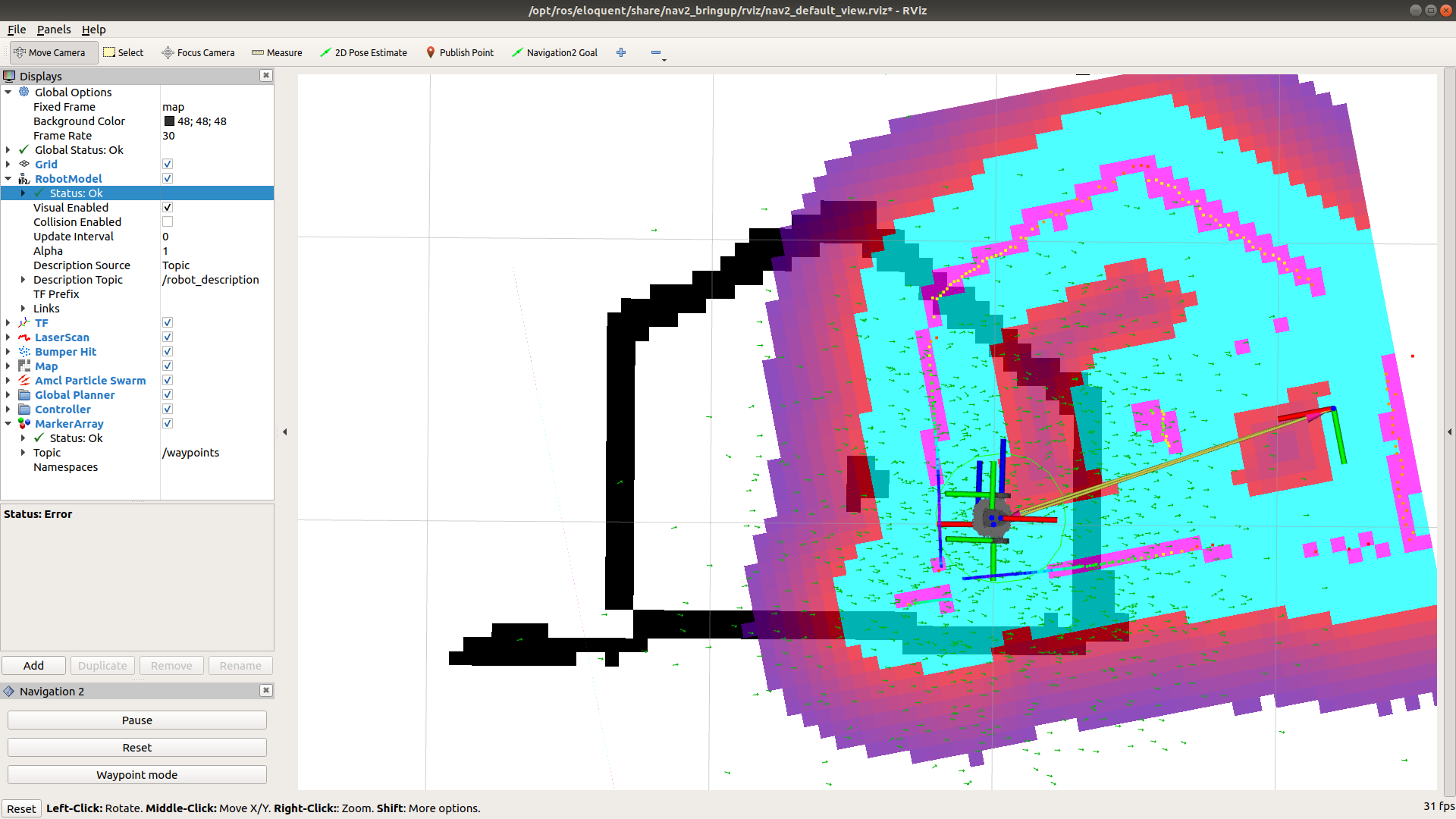

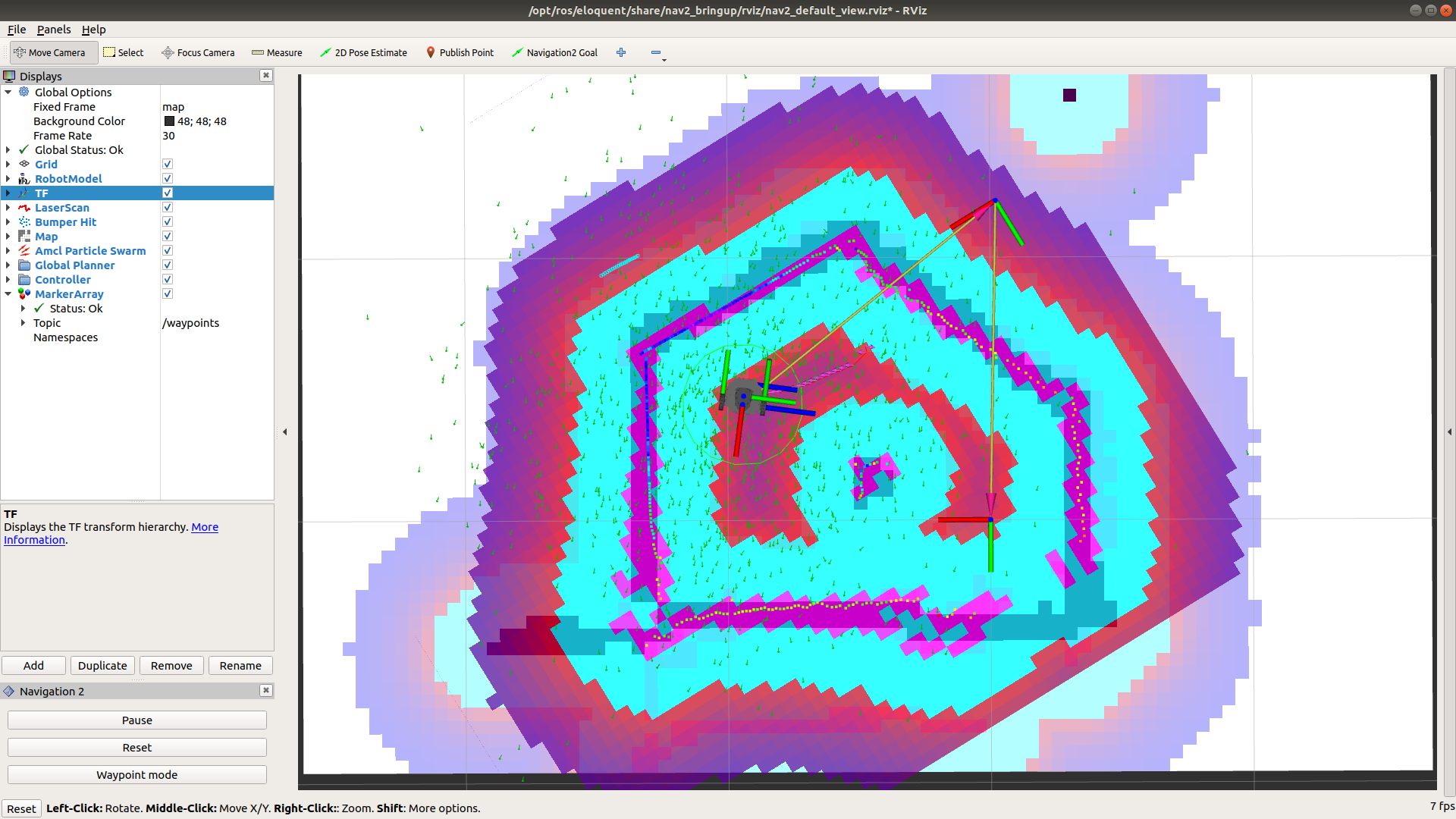

If you are using the simulation with the turtlebot3_world map, it will look like this:

Navigation_is_ready_sim

As soon as the 2D Pose Estimate arrow is drawn, the pose (the transformation from the map to the robot) is updated. As a result, the centre of the laser scan moves, too. To confirm that the new starting pose is accurate, check whether the live laser scan matches the contours of the map. The following two pictures illustrate this: the left one shows a wrong robot pose and the right one shows the correct robot pose.

bad good

Step 2: Give a goal

If the TurtleBot3 is localized, it can automatically create a path from its current position to any reachable target position on the map. To set a goal position, follow the instructions below:

Click the 2D Nav Goal button (also in the top menu)

Click on a specific point in the map to set a goal position and drag the cursor to the direction where TurtleBot should be facing at the end

Hint: If you wish to stop the robot before it reaches the goal position, set the current position of the TurtleBot3 as the goal position.
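You can also send a goal without RViz. Navigation 2 provides a NavigateToPose action (type nav2_msgs/action/NavigateToPose). Here we assume that its server is reachable under the action name /navigate_to_pose; you can check this with `ros2 action list`. Below is a minimal sketch with a hypothetical helper goal_msg that builds the goal in YAML syntax.

```shell
# goal_msg: hypothetical helper. Prints a NavigateToPose goal in YAML syntax.
# Arguments: x [m], y [m], yaw [deg] of the goal pose in the map frame.
goal_msg() {
  awk -v x="$1" -v y="$2" -v yaw_deg="$3" 'BEGIN {
    r = yaw_deg * atan2(0, -1) / 180          # degrees -> radians
    # Heading as a quaternion rotated about z only.
    printf "{pose: {header: {frame_id: map}, pose: {position: {x: %.3f, y: %.3f, z: 0.0}, orientation: {x: 0.0, y: 0.0, z: %.4f, w: %.4f}}}}\n", x, y, sin(r / 2), cos(r / 2)
  }'
}
```

Usage, with placeholder coordinates: `ros2 action send_goal /navigate_to_pose nav2_msgs/action/NavigateToPose "$(goal_msg 1.5 0.5 90)"`.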

The swarm of small green arrows is the visualization of the adaptive Monte Carlo localization (AMCL). Every green arrow stands for a possible position and orientation of the TurtleBot3. Notice that in the beginning the arrows are widely spread. As soon as the robot moves, the arrows are updated because the algorithm incorporates new measurements. During the movement, the distribution of arrows becomes less scattered and concentrates more and more around the robot’s location. This means that the algorithm becomes more and more certain about the pose of the robot in the map.
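To get a feeling for why the cloud of arrows shrinks, here is a toy one-dimensional Monte Carlo localization written in awk and wrapped in a bash function. It is a plain particle filter with a fixed number of particles, not the adaptive variant used by AMCL. The corridor, the wall measurement and all noise values are made-up assumptions. The particles start spread over the whole 10 m corridor. In every step they are moved with the robot (motion update), weighted by how well they explain the measured distance to the wall (measurement update), and resampled.

```shell
# mcl_demo: toy 1-D Monte Carlo localization (plain particle filter, not AMCL).
# A robot drives along a 10 m corridor and measures its distance to the end
# wall. Prints the spread (standard deviation) and mean of the particles.
mcl_demo() {
  awk -v seed="${1:-42}" '
  function gauss(   u1, u2) {                 # standard normal (Box-Muller)
    u1 = 1 - rand(); u2 = rand()
    return sqrt(-2 * log(u1)) * cos(2 * atan2(0, -1) * u2)
  }
  function mean(a, n,   i, s) { for (i = 0; i < n; i++) s += a[i]; return s / n }
  function spread(a, n,   i, m, s) {
    m = mean(a, n)
    for (i = 0; i < n; i++) s += (a[i] - m) ^ 2
    return sqrt(s / n)
  }
  BEGIN {
    srand(seed)
    N = 500; L = 10; truth = 2; step = 0.5; sigma_z = 0.2; sigma_u = 0.05
    for (i = 0; i < N; i++) p[i] = rand() * L     # no idea where we are yet
    printf "step 0: spread %.2f m, mean %.2f m\n", spread(p, N), mean(p, N)
    for (t = 1; t <= 6; t++) {
      truth += step
      z = (L - truth) + gauss() * sigma_z           # noisy distance to the wall
      total = 0
      for (i = 0; i < N; i++) {
        p[i] += step + gauss() * sigma_u            # motion update
        d = (L - p[i]) - z
        w[i] = exp(-d * d / (2 * sigma_z ^ 2))      # measurement likelihood
        total += w[i]
      }
      # Systematic resampling: keep particles in proportion to their weight.
      u = rand() / N; c = w[0] / total; j = 0
      for (i = 0; i < N; i++) {
        while (u > c && j < N - 1) { j++; c += w[j] / total }
        q[i] = p[j]; u += 1 / N
      }
      for (i = 0; i < N; i++) p[i] = q[i]
      printf "step %d: spread %.2f m, mean %.2f m (true %.2f m)\n", t, spread(p, N), mean(p, N), truth
    }
  }'
}
```

Running `mcl_demo` prints one line per step. The spread starts near the width of a uniform distribution over the corridor (about 2.9 m) and drops sharply after the first measurement. This mirrors the green arrows settling around the robot.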

Step 3: Play around

When the robot is on its way to the goal, you can put an obstacle in Gazebo:

nav_goal_ob_1

You can see how the robot reacts to this kind of situation:

nav_goal_ob_2

How the robot reacts depends on the structure of the behavior tree. The one we are using is “navigate_w_replanning_and_recovery.xml”. You can find it in this link.
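To look at the behavior tree files that come with Navigation 2, you can list the XML files installed with the nav2_bt_navigator package. Below is a minimal sketch with a hypothetical helper list_bt_files. The install location share/nav2_bt_navigator/behavior_trees/ is an assumption based on the standard Nav2 packages, and the file names differ between Nav2 releases.

```shell
# list_bt_files: hypothetical helper. Lists the behavior tree XML files below
# <package prefix>/share/nav2_bt_navigator/behavior_trees (assumed location).
list_bt_files() {
  local dir="$1/share/nav2_bt_navigator/behavior_trees" f found=0
  if [ ! -d "$dir" ]; then
    echo "no behavior tree folder in $dir" >&2
    return 1
  fi
  for f in "$dir"/*.xml; do
    [ -e "$f" ] || continue              # no match: the glob stays literal
    basename "$f"
    found=1
  done
  [ "$found" -eq 1 ]
}
```

Usage: `list_bt_files "$(ros2 pkg prefix nav2_bt_navigator)"`. The name of the parameter that selects the tree differs between Nav2 releases, so check `ros2 param list /bt_navigator` for your version.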